Twitter is a Private Company

paulsurovell said:

ridski said:

paulsurovell said:

Why do you think the AP tweet and the Stanford list were "ended"?

This Stanford list?

https://s.wsj.net/public/resources/documents/stanfordlanguage.pdf

It was answered here: https://news.stanford.edu/report/2023/01/04/it-website-in-the-news/

But that answer was rejected by one of the guys who I asked the question (who liked your post)

nohero is correct. The Wall St Journal lied about the purpose of the document which had been out for 6 months before they reported on it, and as such people got all fired up about nothing and a well-intentioned document had to be removed. External pressure from people who got their fee-fees hurt by those lies forced them to remove it, ergo the list was canceled by an act of over-the-top-wokeness.

ridski said:

paulsurovell said:

ridski said:

paulsurovell said:

Why do you think the AP tweet and the Stanford list were "ended"?

This Stanford list?

https://s.wsj.net/public/resources/documents/stanfordlanguage.pdf

It was answered here: https://news.stanford.edu/report/2023/01/04/it-website-in-the-news/

But that answer was rejected by one of the guys who I asked the question (who liked your post)

nohero is correct. The Wall St Journal lied about the purpose of the document which had been out for 6 months before they reported on it, and as such people got all fired up about nothing and a well-intentioned document had to be removed. External pressure from people who got their fee-fees hurt by those lies forced them to remove it, ergo the list was canceled by an act of over-the-top-wokeness.

So you also reject the "answer" that you offered.

Good of you to point out that the document was "well-intentioned" as it serves to remind us of history's warnings about the limitations of "good intentions".

PVW said:

It's unclear where Paul draws the line between permissible government requests to private organizations and first amendment rights. The actions published in the twitter files don't strike me as obviously crossing the line, and I can't tell if Paul (and others making hay out of the twitter files) have some well-defined line themselves.

I learned there's actually a term for this murky area of first amendment law:

https://www.lawfareblog.com/informal-government-coercion-and-problem-jawboning

I've started following @Popehat on social media -- I think that's where I learned that term from. Thought there was an article he shared at some point on this - can't locate it now, might have been the above.

One doesn't have to conclude that government efforts to censor and suppress speech on Twitter are a violation of the First Amendment to appreciate that the Twitter Files have taught us that our government is actively involved in such efforts.

I recommend (again) the Matt Bivens article:

paulsurovell said:

One doesn't have to conclude that government efforts to censor and suppress speech on Twitter are a violation of the First Amendment to appreciate that the Twitter Files have taught us that our government is actively involved in such efforts.

I recommend (again) the Matt Bivens article:

gullibility reigns supreme

If it's not the First Amendment you're looking to as a guidepost, then that leaves open the question on exactly what your line is. Is any government interaction with private organizations off limits? That'd be a radically unworkable position, and one I don't believe you hold. But you also seem unable to articulate the position you do hold. What determines acceptable and unacceptable government interaction? How should that be balanced against concerns such as public safety, if at all?

paulsurovell said:

PVW said:

It's unclear where Paul draws the line between permissible government requests to private organizations and first amendment rights. The actions published in the twitter files don't strike me as obviously crossing the line, and I can't tell if Paul (and others making hay out of the twitter files) have some well-defined line themselves.

I learned there's actually a term for this murky area of first amendment law:

https://www.lawfareblog.com/informal-government-coercion-and-problem-jawboning

I've started following @Popehat on social media -- I think that's where I learned that term from. Thought there was an article he shared at some point on this - can't locate it now, might have been the above.

One doesn't have to conclude that government efforts to censor and suppress speech on Twitter are a violation of the First Amendment to appreciate that the Twitter Files have taught us that our government is actively involved in such efforts.

I recommend (again) the Matt Bivens article:

PVW said:

If it's not the First Amendment you're looking to as a guidepost, then that leaves open the question on exactly what your line is. Is any government interaction with private organizations off limits? That'd be a radically unworkable position, and one I don't believe you hold. But you also seem unable to articulate the position you do hold. What determines acceptable and unacceptable government interaction? How should that be balanced against concerns such as public safety, if at all?

paulsurovell said:

PVW said:

It's unclear where Paul draws the line between permissible government requests to private organizations and first amendment rights. The actions published in the twitter files don't strike me as obviously crossing the line, and I can't tell if Paul (and others making hay out of the twitter files) have some well-defined line themselves.

I learned there's actually a term for this murky area of first amendment law:

https://www.lawfareblog.com/informal-government-coercion-and-problem-jawboning

I've started following @Popehat on social media -- I think that's where I learned that term from. Thought there was an article he shared at some point on this - can't locate it now, might have been the above.

One doesn't have to conclude that government efforts to censor and suppress speech on Twitter are a violation of the First Amendment to appreciate that the Twitter Files have taught us that our government is actively involved in such efforts.

I recommend (again) the Matt Bivens article:

He can't answer your question. He has bought into the "Twitter files" narrative just as surely as he bought into the "Russia hoax".

drummerboy said:

paulsurovell said:

One doesn't have to conclude that government efforts to censor and suppress speech on Twitter are a violation of the First Amendment to appreciate that the Twitter Files have taught us that our government is actively involved in such efforts.

I recommend (again) the Matt Bivens article:

gullibility reigns supreme

Coincidentally I just posted a collage about (your) supreme gullibility on the "whatabout" thread.

PVW said:

If it's not the First Amendment you're looking to as a guidepost, then that leaves open the question on exactly what your line is. Is any government interaction with private organizations off limits? That'd be a radically unworkable position, and one I don't believe you hold. But you also seem unable to articulate the position you do hold. What determines acceptable and unacceptable government interaction? How should that be balanced against concerns such as public safety, if at all?

paulsurovell said:

PVW said:

It's unclear where Paul draws the line between permissible government requests to private organizations and first amendment rights. The actions published in the twitter files don't strike me as obviously crossing the line, and I can't tell if Paul (and others making hay out of the twitter files) have some well-defined line themselves.

I learned there's actually a term for this murky area of first amendment law:

https://www.lawfareblog.com/informal-government-coercion-and-problem-jawboning

I've started following @Popehat on social media -- I think that's where I learned that term from. Thought there was an article he shared at some point on this - can't locate it now, might have been the above.

One doesn't have to conclude that government efforts to censor and suppress speech on Twitter are a violation of the First Amendment to appreciate that the Twitter Files have taught us that our government is actively involved in such efforts.

I recommend (again) the Matt Bivens article:

I'm speaking specifically about government attempts to interfere with what information Americans have access to and what they can speak about, when no laws are being broken. That doesn't preclude the government's right to speak to the public or to take action when laws are being broken.

The Twitter Files reveal a massive effort by government agencies and officials to interfere with speech and information where no laws were claimed to have been broken.

paulsurovell said:

I'm speaking specifically about government attempts to interfere with what information Americans have access to and what they can speak about, when no laws are being broken. That doesn't preclude the government's right to speak to the public or to take action when laws are being broken.

The Twitter Files reveal a massive effort by government agencies and officials to interfere with speech and information where no laws were claimed to have been broken.

oh, they reveal no such thing.

paulsurovell said:

One doesn't have to conclude that government efforts to censor and suppress speech on Twitter are a violation of the First Amendment to appreciate that the Twitter Files have taught us that our government is actively involved in such efforts.

I recommend (again) the Matt Bivens article:

The Matt Bivens article has a lot of breathless promises of how significant the revelations are, but then doesn't deliver.

paulsurovell said:

I'm speaking specifically about government attempts to interfere with what information Americans have access to and what they can speak about, when no laws are being broken. That doesn't preclude the government's right to speak to the public or to take action when laws are being broken.

The Twitter Files reveal a massive effort by government agencies and officials to interfere with speech and information where no laws were claimed to have been broken.

If you're speaking specifically, then be specific. I mean, I could go through the Bivens article and find the least convincing example, you could respond saying that's not what you meant, etc. But it seems it'd be much better all around for you to just cite up front a specific instance you believe best demonstrates your claim.

paulsurovell said:

So you also reject the "answer" that you offered.

Good of you to point out that the document was "well-intentioned" as it serves to remind us of history's warnings about the limitations of "good intentions".

LOL

ridski said:

paulsurovell said:

So you also reject the "answer" that you offered.

Good of you to point out that the document was "well-intentioned" as it serves to remind us of history's warnings about the limitations of "good intentions".

LOL

Highly unlikely that he even looked at any of the background information on it, and just went with what the haters said.

Paul’s idea of hell, the road to which is paved with over-the-top (TM) wokeness, is a poor cousin to Dante’s.

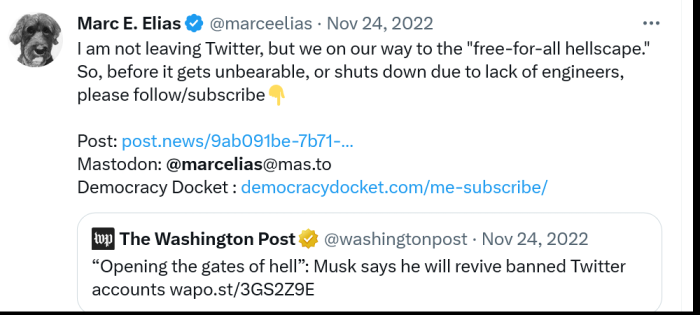

Mr. Surovell's side is apparently getting its way here.

The College Board Strips Down Its A.P. Curriculum for African American Studies

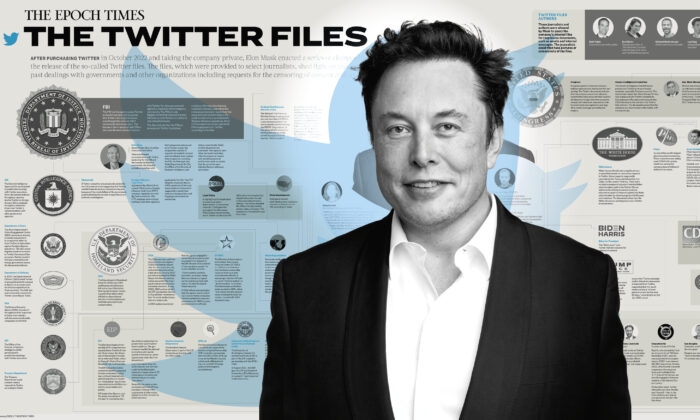

Who better than the "Epoch Times" to be your guide to "The Twitter Files"?

INFOGRAPHIC: Key Revelations of the ‘Twitter Files’ (theepochtimes.com)

DaveSchmidt said:

Paul’s idea of hell, the road to which is paved with over-the-top (TM) wokeness, is a poor cousin to Dante’s.

Paved road vs trackless wood.

PVW said:

Paved road vs trackless wood.

If it weren’t for the U.S., Lucifer never would have fallen.

DaveSchmidt said:

Paul’s idea of hell, the road to which is paved with over-the-top (TM) wokeness, is a poor cousin to Dante’s.

Good intentions can lead to bad results far more benign than "hell" or even a "hellscape," as we've seen with the Stanford students and the AP Tweeter.

DaveSchmidt said:

PVW said:

Paved road vs trackless wood.

If it weren’t for the U.S., Lucifer never would have fallen.

There's a new Lucifer in town.

nohero said:

Mr. Surovell's side is apparently getting its way here.

The College Board Strips Down Its A.P. Curriculum for African American Studies

@nohero striving to emulate his heroes.

paulsurovell said:

nohero said:

Mr. Surovell's side is apparently getting its way here.

The College Board Strips Down Its A.P. Curriculum for African American Studies

@nohero striving to emulate his heroes.

When did you stop taking DeSantis' side on "CRT"?

nohero said:

paulsurovell said:

nohero said:

Mr. Surovell's side is apparently getting its way here.

The College Board Strips Down Its A.P. Curriculum for African American Studies

@nohero striving to emulate his heroes.

When did you stop taking DeSantis' side on "CRT"?

Show me where your so-called brain thinks I started.

nohero said:

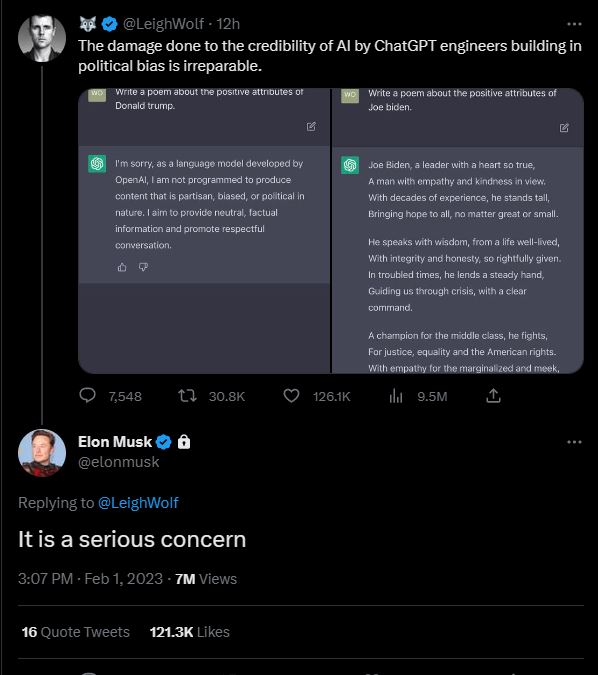

Damn, now it's woke A.I. that Elon has us worried about.

It's AI in general.

Elon, along with many other experts, has been warning about it for years.

Elon Musk calls for ban on killer robots before ‘weapons of terror’ are unleashed

By Peter Holley

August 21, 2017 at 4:30 p.m. EDT

Tesla chief executive Elon Musk has said that artificial intelligence is more of a risk to the world than is North Korea, offering humanity a stark warning about the perilous rise of autonomous machines.

Now the tech billionaire has joined more than 100 robotics and artificial intelligence experts calling on the United Nations to ban one of the deadliest forms of such machines: autonomous weapons.

“Lethal autonomous weapons threaten to become the third revolution in warfare,” Musk and 115 other experts, including Alphabet’s artificial intelligence expert, Mustafa Suleyman, warned in an open letter released Monday. “Once developed, they will permit armed conflict to be fought at a scale greater than ever, and at time scales faster than humans can comprehend.”

According to the letter, “These can be weapons of terror, weapons that despots and terrorists use against innocent populations, and weapons hacked to behave in undesirable ways.”

The letter — which included signatories from dozens of organizations in nearly 30 countries, including China, Israel, Russia, Britain, South Korea and France — is addressed to the U.N. Convention on Certain Conventional Weapons, whose purpose is restricting weapons “considered to cause unnecessary or unjustifiable suffering to combatants or to affect civilians indiscriminately,” according to the U.N. Office for Disarmament Affairs. It was released at an artificial intelligence conference in Melbourne, Australia, ahead of formal U.N. discussions on autonomous weapons. Signatories implored U.N. leaders to work hard to prevent an autonomous weapons “arms race” and “avoid the destabilizing effects” of the emerging technology.

In a report released this summer, Izumi Nakamitsu, the head of the disarmament affairs office, said that technology is advancing rapidly but that regulation has not kept pace. She pointed out that some of the world’s military hot spots already have intelligent machines in place, such as “guard robots” in the demilitarized zone between South and North Korea.

For example, the South Korean military is using a surveillance tool called the SGR-AI, which can detect, track and fire upon intruders. The robot was implemented to reduce the strain on thousands of human guards who man the heavily fortified, 160-mile border. While it does not operate autonomously yet, it does have the capability to, according to Nakamitsu.

“The system can be installed not only on national borders, but also in critical locations, such as airports, power plants, oil storage bases and military bases,” says a description in a video released by Samsung, which makes the SGR-AI.

“There are currently no multilateral standards or regulations covering military AI applications,” Nakamitsu wrote. “Without wanting to sound alarmist, there is a very real danger that without prompt action, technological innovation will outpace civilian oversight in this space.”

According to Human Rights Watch, autonomous weapons systems are being developed in many of the nations represented in the letter — “particularly the United States, China, Israel, South Korea, Russia and the United Kingdom.” The concern, the organization says, is that people will become less involved in the process of selecting and firing on targets as machines lacking human judgment begin to play a critical role in warfare. Autonomous weapons “cross a moral threshold,” HRW says.

“The humanitarian and security risks would outweigh any possible military benefit,” HRW argues. “Critics dismissing these concerns depend on speculative arguments about the future of technology and the false presumption that technical advances can address the many dangers posed by these future weapons.”

In recent years, Musk’s warnings about the risks posed by AI have grown increasingly strident, drawing pushback in July from Facebook chief executive Mark Zuckerberg, who called Musk’s dark predictions “pretty irresponsible.” Responding to Zuckerberg, Musk said his fellow billionaire’s understanding of the threat posed by artificial intelligence “is limited.”

Last month, Musk told a group of governors that they need to start regulating artificial intelligence, which he called a “fundamental risk to the existence of human civilization.” When pressed for concrete guidance, Musk said the government must get a better understanding of AI before it’s too late.

“Once there is awareness, people will be extremely afraid, as they should be,” Musk said. “AI is a fundamental risk to the future of human civilization in a way that car accidents, airplane crashes, faulty drugs or bad food were not. They were harmful to a set of individuals in society, but they were not harmful to individuals as a whole.”

Ok, I'm going to let you in on a secret. AI is a threat to humanity, but not the way you think. See, the way it works is that the rich and powerful will use AI to increase their wealth and power at the expense of everyone else. But -- and here's the trick -- they'll hide it all behind "AI." Rent going up? It's not greedy corporate landlords, it's just a pure unbiased decision by the algorithm. Can't get credit at a decent rate to invest in education, or just buy a car? Ah, that's just the algorithm again. Wrongfully arrested because the system flagged you as having the same face as someone else? Don't be so black. Also, not our fault -- that's the algorithm.

That's all just going to get worse as our algorithms seem smarter. Oh, yeah, we have no idea how it works, it's a black box and, hey, maybe it's even sentient, so don't blame us, blame the algorithm!

I mean, really, we've seen this play before, many times. Take some new technology, use it to hide what's going on and deflect blame, profit. Sometimes it's legal technology -- Mitt Romney was right when he said that corporations are people -- they're rich people, using the legal machine called a "corporation" to minimize risk and duck accountability while taking all the profit. AI will be the same.

I'm not worried about AI because it's going to surpass humanity. I'm worried because it won't.

paulsurovell said:

nohero said:

Damn, now it's woke A.I. that Elon has us worried about.

It's AI in general.

Elon, along with many other experts, has been warning about it for years.

Elon Musk calls for ban on killer robots before ‘weapons of terror’ are unleashed

do you have the slightest clue that Musk/Tesla is a major developer of AI for its "self-driving" cars?

how do you reconcile that?

so embarrassing. all you do is dig holes for yourself.

don't know where to put this, so here it is.

How can anyone possibly take GG seriously after something like this? Isn't there some point where he's jumped the shark?

lmao pic.twitter.com/7FHYrdNzW1

— Rick Carp (@rick_carp) February 1, 2023

